Rethinking Robots: Why Visual Representation of AI Matters

The images we use to depict AI, from robots to blue brains and cascading code, are more than just clichés. They shape public understanding, feed myths, and undermine meaningful engagement. Better Images of AI is working to change that.

By Audrey Hingle and Tania Duarte

We know images shape perception because of a large body of interdisciplinary research, including work on phenomena like the picture superiority effect, in which pictures are more likely to be remembered than words. That’s why, when it comes to artificial intelligence, the visuals we use to represent it matter. Whether they’re gleaming white robots, circuit-brain hybrids, or Matrix-style green code, these images can obscure the reality of how AI works, who builds it, and who is affected. In doing so, they limit public understanding and participation in AI and its regulation.

To explore why this matters, I spoke with Tania Duarte, founder of We and AI and convener of the volunteer-run project Better Images of AI. The initiative provides a growing collection of Creative Commons images designed to replace AI tropes and offer a more accurate, inclusive visual vocabulary for artificial intelligence. For those of us working in technology, policy, communications, journalism, or related fields, our image choices aren’t just aesthetic; they shape how people understand and engage with AI.

Fantasy Visuals, Real-World Harms

Duarte’s primary role is as founder of We and AI, a nonprofit that works to improve public understanding of artificial intelligence and its impact on society. Through that work, she set out to engage the public on facial recognition, algorithmic bias, and the expanding role of AI in everyday life. But again and again, she found the conversation stalled at the imagery. “When people think AI is a robot or a glowing blue brain, they disengage. They either feel fear, awe, or confusion, but not agency.”

These aren’t isolated responses. Duarte points to research by Dr. Kanta Dihal and others showing that these visuals reinforce harmful assumptions: that AI is incomprehensible, that it operates independently of human control, and that it belongs to a narrow group of developers: often white, male, and powerful.

“The visual language of AI tells people they’re not invited to participate,” Duarte says. “It signals that AI is beyond scrutiny or regulation, which plays right into the hands of those pushing hype over accountability.”

She is referring to how dominant visual tropes—like humanoid robots, glowing blue brains, and cascading code—frame AI as something autonomous, mystical, and detached from human decision-making. These images suggest that AI is either inherently intelligent or essentially unknowable. In both cases, it appears beyond human influence. That framing distances people from the systems that impact their daily lives, from hiring algorithms and predictive policing to recommendation engines and credit scoring.

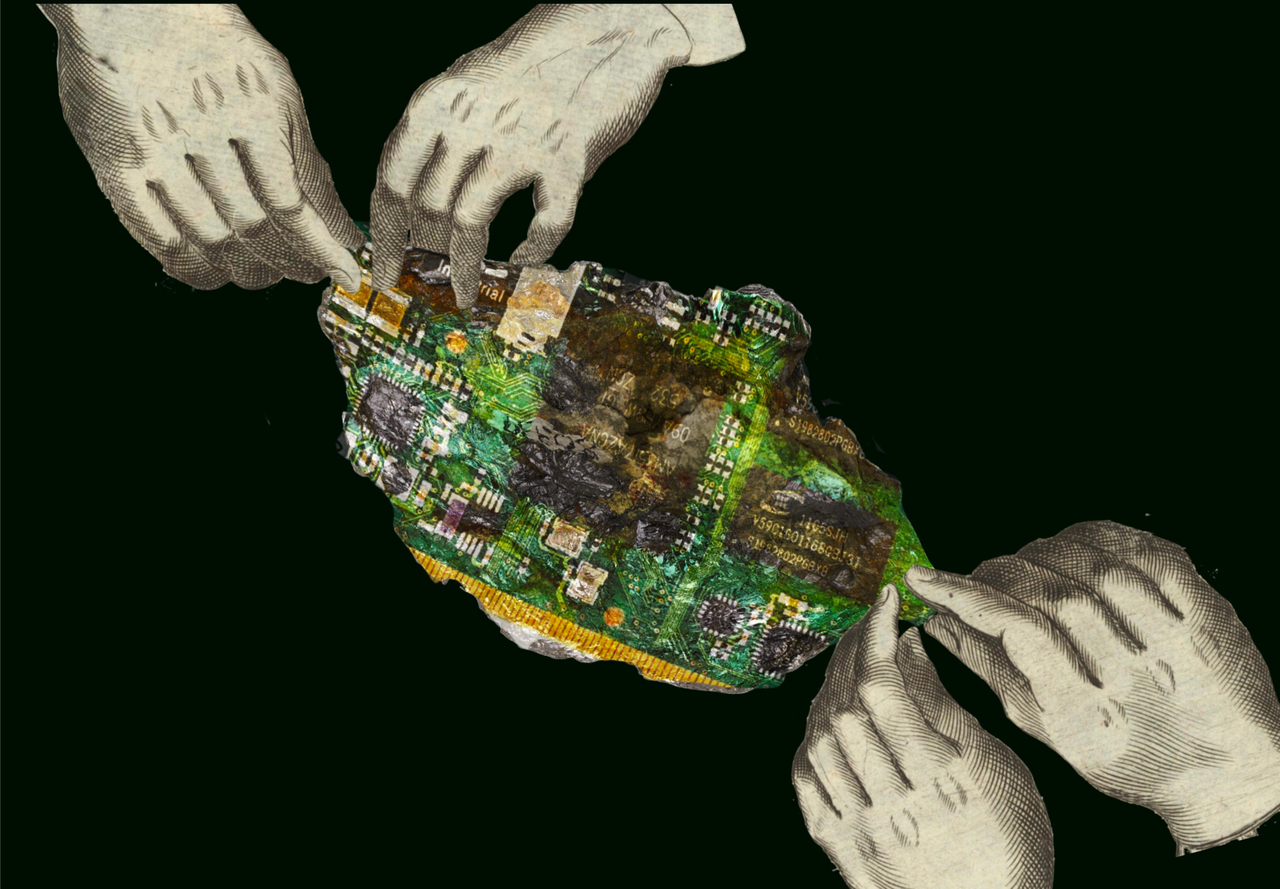

Duarte explains that these visuals do not just confuse the public. They actively disempower. If AI is shown as magical or godlike, why would anyone believe they can question it, much less intervene? She points to the frequent use of the robot hand reaching out to a human, a visual echo of Michelangelo’s Creation of Adam. This familiar image elevates the machine to divine status while casting people as passive, secondary, or replaceable.

At the same time, visuals often center white male executives, sterile sci-fi settings, and cold, blue tones. These choices send subtle cues about who belongs and who holds power. The result is a visual field that positions AI as the domain of a technical elite, and signals to everyone else that their role is limited to watching from the sidelines.

This dynamic has real consequences. When AI is presented as too complex, too advanced, or already inevitable, it becomes harder for the public to push for transparency or demand regulation. That makes it easier for companies to promote inflated claims about AI’s potential while resisting oversight. The fantasy becomes a buffer against accountability.

The Tropes To Watch For

Better Images of AI’s guide for users and creators outlines eight recurring visuals that often mislead, distract, or reinforce harmful assumptions:

- Descending code: This trope, often a direct reference to The Matrix, frames AI as dystopian and impenetrable. For those unfamiliar with the films, it can simply appear as a wall of cryptic symbols, reinforcing the idea that AI is beyond understanding.

- White robots: Depicting AI as pale humanoid robots implies that intelligence and power are white by default. This visual excludes the global majority and reinforces racial and ethnic biases within AI narratives.

- Variations on The Creation of Adam: Visuals showing a robotic and human hand nearly touching evoke religious imagery that casts AI as divine or mystical. This reinforces the idea that AI is beyond human agency or regulation and elevates developers to a godlike status.

- The human brain: While some AI techniques, such as artificial neural networks, are loosely inspired by the brain, most AI systems have little in common with human cognition. Equating AI with the brain suggests a false equivalence and encourages unrealistic expectations.

- White men in suits: This trope frames AI as being developed and controlled by a narrow demographic. It erases the contributions of less-visible workers like data labelers, technicians, and impacted communities, and centers narratives of power rather than accountability.

- The color blue: Blue has long been associated with progress and technology in the Global North, but in AI imagery it also conveys coldness, masculinity, and emotional distance. It subtly nudges viewers toward resignation rather than critical engagement.

- Science fiction references: Imagery borrowed from films like The Terminator or 2001: A Space Odyssey shapes public understanding far more than real-world systems do. These visuals blur fact and fiction, reinforcing fears and fantasies instead of grounded understanding.

- Anthropomorphism: Giving AI human-like features suggests that it can act independently or make decisions like a person. This masks the roles of designers, developers, and data sources, making it harder to assign accountability. It also invites harmful stereotypes when gender or race is projected onto machines.

Public Understanding Starts With Better Visuals

Why does this matter for governance? Because representation drives comprehension, and comprehension enables participation. Duarte is blunt: “If people don’t understand how AI systems are built or used, they can’t meaningfully weigh in on how they should be governed.”

She notes that even lawyers have fallen for the fantasy, submitting court filings that cited fake case law generated by large language models. If trained legal professionals can be misled, what about policymakers, journalists, or the public at large?

The stakes are high. AI is shaping decisions about employment, healthcare, justice, and infrastructure. But if we keep showing it as a robot in a courtroom with a gavel, we obscure the real questions: How is this system trained? Who’s accountable for its outcomes? Who benefits, and who’s at risk?

Changing Visual Culture in a Generative Era

Stock photography rewards familiarity. The more often a trope is used, the more likely it is to be reused. And now, generative AI tools are reproducing, and often exaggerating, the same narrow visual cues. Duarte sees it getting worse: more gendered, more stereotyped, more decontextualized.

“Sparkles and magic wands are now icons for generative AI,” she says. “That tells people it’s effortless, enchanting, and beyond critique. But what we need is transparency, not enchantment.”

There’s no single image that can replace the current crop. Duarte argues for plurality, specificity, and visibility. Show the actual systems being used. Show the supply chains and workers: from data labelers to rare-earth miners. Show the communities impacted. And show the diverse people developing alternatives.

Toward A Richer Visual Landscape

Everyone who runs and maintains Better Images of AI is a volunteer. We and AI manages the project and subsidises it through its other nonprofit work, because curating and documenting creative approaches to AI takes significant time and effort. Contributors include artists, researchers, educators, and activists who share a common belief: that visuals shape narratives, and better narratives can empower better choices.

“We want images that reflect the reality of AI: that it’s built by people, shaped by choices, and open to challenge,” Duarte says. “If we can see that clearly, we’re more likely to govern wisely.”

Better Images of AI is currently inviting participation from organisations and individuals in a few different areas. If you’d like to get involved, you can contact the team using the form on its website.

Elsewhere in the IX community...

Re-Imagining Cryptography and Privacy (ReCAP) Workshop

ReCAP was a free hybrid workshop held June 3–4, 2025, in person at The City College of New York and online via Zoom. Sessions covered protest mobility, privacy behaviors under surveillance, and designing cryptographic tools that serve community needs. Participants also discussed systems of power, data justice, and the role of privacy in organizing.

Keep an eye on the ReCAP 2025 page to revisit videos, artifacts, slides, etc. from this year, and visit the ReCAP24 page to see materials from 2024.

Support the Internet Exchange

If you find our emails useful, consider becoming a paid subscriber! You'll get access to our members-only Signal community where we share ideas, discuss upcoming topics, and exchange links. Paid subscribers can also leave comments on posts and enjoy a warm, fuzzy feeling.

Not ready for a long-term commitment? You can always leave us a tip.

This Week's Links

From the Group Chat 👥 💬

This week in our Signal community, we got talking about:

- Europe continues to worry about its tech stack.

- Microsoft denied cutting off services to the International Criminal Court (ICC) after its chief prosecutor, Karim Khan, was sanctioned under a Trump executive order and his email account was reportedly “disconnected.” https://www.politico.eu/article/microsoft-did-not-cut-services-international-criminal-court-president-american-sanctions-trump-tech-icc-amazon-google

- But did anything really change? Last week we shared a link that said a U.S. sanctions order forced Microsoft to cut off the International Criminal Court’s accounts.

- The discrepancy comes down to who was sanctioned: Khan individually, not the ICC. Microsoft, along with pretty much all tech companies, will "disconnect" any user the administration adds to the Office of Foreign Assets Control sanctions list.

- While Microsoft claims it’s only chief prosecutor Khan’s email that has been cut off, concerns that U.S. tech giants could pull services from European institutions remain.

- Elon Musk and messaging apps:

- Musk announced that XChat will launch with “bitcoin-style encryption.” https://www.businessinsider.com/x-elon-musk-xchat-encryption-audio-video-calls-file-sharing-2025-6

- What does that even mean? If he’s referring to blockchain, choosing a public ledger as the model for his encryption is more than a bit strange.

- Perhaps he’s referring to AES-256 (Advanced Encryption Standard) 🤷

- Telegram also announced on X that it’s integrating Grok across all Telegram apps. Given that Telegram chats aren’t end-to-end encrypted (E2EE) by default, that could mean a lot of training data for Grok and some rather serious privacy concerns.

- Will it actually happen? Who knows: Musk replied, “No deal has been signed.”

Internet Governance

- Avri Doria argues that ICANN’s decision to delay the ATRT4 review and replace it with a staff-led process breaks its own rules and undermines its claim to be a bottom-up, community-led organization. https://m17m.is/is-icann-giving-up-its-claim-to-bottom-up-multistakeholder-process-legitimacy-b146fa82d3cd

- A federal judge has barred state officials from enforcing a Florida law that would ban social media accounts for young children. https://apnews.com/article/florida-social-media-ban-minors-lawsuit-678e71c6c6183b87435b7f51feb71fbe

- Even Republicans have started to worry about the controversial AI provision in Trump’s proposed budget bill that would block U.S. states from regulating AI for 10 years. https://www.platformer.news/state-ai-moratorium-republican-opposition-greene

- ARTICLE 19 urges Bangladesh to revise its draft data protection law to safeguard privacy and freedom of expression, warning it could otherwise enable censorship and unchecked surveillance. https://www.article19.org/resources/bangladesh-draft-data-protection-law-must-protect-freedom-of-expression

- The second Trump administration is using AI rhetoric to centralize power, dismantle public institutions, and serve the interests of tech elites, argue Jacob Metcalf and Meg Young. https://www.techpolicy.press/tech-power-and-the-crisis-of-democracy

- Governments are increasingly using DNS resolvers as censorship tools, turning a vital part of internet infrastructure into a frontline for control, warns Farzaneh Badiei. https://pulse.internetsociety.org/blog/why-dns-resolvers-are-the-next-internet-frontline

- Satellite internet services like Starlink may seem like a promising solution to politically motivated internet shutdowns, but without robust democratic governance, they risk introducing new forms of control like foreign surveillance, corporate power, and deepened digital inequality, argues Dinah van der Geest. https://www.thedailystar.net/opinion/views/news/freedom-the-sky-the-limits-satellite-internet-bangladesh-3910386

- The EU’s Disinformation Code of Practice is being mischaracterized by U.S. right-wing politicians and tech executives as authoritarian censorship, argue Renee DiResta and Dean Jackson. https://www.lawfaremedia.org/article/regulation-or-repression--how-the-right-hijacked-the-dsa-debate

Digital Rights

- Coworker.org’s new report “Little Tech Goes Global” reveals how venture-backed startups are rapidly spreading AI-powered workplace surveillance across the Global South. https://home.coworker.org/little-tech-goes-global

- And in the news: Venture capital is fueling a global surge in workplace surveillance technologies, especially in countries with weak regulatory frameworks. https://restofworld.org/2025/employee-surveillance-software-vc-funding

- AI Now Institute's 2025 Artificial Power report lays out a bold, actionable strategy to help communities, policymakers, and the public reclaim control from powerful AI companies. https://ainowinstitute.org/publications/research/ai-now-2025-landscape-report

- Activists behind Chile’s child support law report facing a tech-facilitated backlash that targets mothers at the intersection of caregiving, activism, and the justice system. (Spanish) https://amarantas.org/2025/02/17/violencia-vicaria-digital-otra-forma-de-violencia-de-genero-facilitada-por-tecnologia

Technology for Society

- ISO/IEC 42005:2025 sets a new international standard to guide organisations in assessing the societal, ethical, and human impacts of AI systems throughout their lifecycle. https://www.iso.org/standard/42005

- Mozilla is shutting down Pocket, a favorite product of IX. Aram Zucker-Scharff shares 20 ideas for what he would do with Pocket if Mozilla gave it to him. (Could you please give it to him, Mozilla?) https://aramzs.xyz/thoughts/20-things-i-would-do-with-pocket-if-they-gave-it-to-me

- Digg founder Kevin Rose has also offered to acquire Pocket. https://techcrunch.com/2025/05/23/digg-founder-kevin-rose-offers-to-buy-pocket-from-mozilla

- Duco’s new report outlines how human-generated datasets can mitigate AI risks in high-stakes, culturally sensitive contexts. https://www.ducoexperts.com/duco-human-generated-datasets

- Andrew Losowsky, journalist and product lead, reflects on what he’s learned from ten years of building and growing Coral, an open-source, community-first commenting platform used by newsrooms worldwide to foster healthier online conversations. https://www.linkedin.com/pulse/what-ive-learned-from-ten-years-coral-andrew-losowsky-7byie

- After a high-profile split, Meta and Oculus founder Palmer Luckey are partnering again, this time to build combat AR/VR headsets for the U.S. Army through Luckey’s defense tech firm, Anduril. https://www.wsj.com/tech/meta-army-vr-headsets-anduril-palmer-luckey-142ab72a

- Leaving a social media platform often forces content creators to start over from scratch on a new site. But what if it didn't have to be that way? Divyansha Segal on why decentralized social media matters. https://publicknowledge.org/why-decentralized-social-media-matters

Privacy and Security

- Researchers have discovered that Meta and Yandex are exploiting browser-to-app communication channels on Android to de-anonymize users’ web browsing data, linking it to their identities in native apps. https://arstechnica.com/security/2025/06/meta-and-yandex-are-de-anonymizing-android-users-web-browsing-identifiers

- Major cybersecurity firms, including Microsoft, CrowdStrike, Palo Alto Networks, and Google, are launching a shared glossary of hacker group nicknames to reduce confusion caused by inconsistent naming across the industry. https://www.reuters.com/sustainability/boards-policy-regulation/forest-blizzard-vs-fancy-bear-cyber-companies-hope-untangle-weird-hacker-2025-06-02

- Chipmaking giant Qualcomm released patches on Monday fixing a series of vulnerabilities in dozens of chips, including three zero-days. https://techcrunch.com/2025/06/03/phone-chipmaker-qualcomm-fixes-three-zero-days-exploited-by-hackers

- A new study introduces WireWatch, a tool for assessing Android app network security, and finds that many top Mi Store apps use insecure proprietary encryption. https://www.computer.org/csdl/proceedings-article/sp/2025/223600d916/26hiVQjbZqE

- Apple disclosed that it has shared data with governments worldwide about thousands of push notifications, which can reveal users’ devices and, in some cases, include unencrypted content like the notification text. https://www.404media.co/apple-gave-governments-data-on-thousands-of-push-notifications

Upcoming Events

- The U.S. House Judiciary Subcommittee on Crime and Federal Government Surveillance will hold a hearing on the CLOUD Act, focusing on U.S.-UK data access agreements. Watch live, or watch the recording. June 5, 10:00am ET. Online. https://judiciary.house.gov/committee-activity/hearings/foreign-influence-americans-data-through-cloud-act-0

- FediForum is happening now, featuring talks on the future of the open social web, decentralized platforms, moderation, interoperability, and community-led innovation. June 5-7. Online. https://fediforum.org/2025-06

- The Atlantic Council’s Democracy + Tech Initiative is hosting a conversation on the importance of the Internet Governance Forum (IGF) and the role it has played for the past twenty years in providing an open, inclusive, and diverse space for discussing internet policy and governance. June 12, 9:00am ET

- Circuit Breakers is a gathering for all workers, organizers, and activists in the tech industry to come together and learn, build community, strategize, and recognize our collective power. October 18-19. New York, NY. https://techworkerscoalition.org/circuit-breakers

Careers and Funding Opportunities

United States

- Develop For Good: Lead Software Engineer (Tech Lead). San Francisco, CA. https://job-boards.greenhouse.io/developforgood/jobs/4536358007

- Common Sense: Senior Director, Product - Education. San Francisco, CA. https://www.paycomonline.net/v4/ats/web.php/jobs/ViewJobDetails?clientkey=4AA7E7402507528EEEA6308617BB1F08&job=123660

- Strava: Group Product Manager, Trust & Safety. San Francisco, CA. https://job-boards.greenhouse.io/strava/jobs/6900604

- TechCongress: Applications for the January 2026 Fellowship are open! Washington, D.C. https://techcongress.io/apply

- Omidyar Network: Principal, Programs (Technology Policy). Washington, D.C. https://job-boards.greenhouse.io/omidyarnetwork/jobs/6952324

- Lenovo: Senior Privacy and Data Governance Counsel. Morrisville, NC. https://jobs.lenovo.com/en_US/careers/JobDetail?jobId=67390#

- The Technical Projects Group: Senior Product Manager for an early-stage product in the democracy and civic engagement space. New York, NY. https://jobs.lever.co/schmidt-entities/382af065-b6ad-40a9-9716-a98e2bd7dd1f

- Project Liberty: Partnerships Program Associate. New York, NY. https://app.trinethire.com/companies/34219-project-liberty-llc/jobs/108575-partnerships-program-associate

- UNDP: Project Management and Community Engagement Specialist (Home based). New York, NY. https://estm.fa.em2.oraclecloud.com/hcmUI/CandidateExperience/en/sites/CX_1/job/26826

- Tech Coalition: Program Manager, Lantern. Remote U.S. https://www.technologycoalition.org/careers/program-manager-lantern

- Freedom of the Press Foundation: Engineering Manager, SecureDrop. Remote U.S. https://freedom.press/careers/job/?gh_jid=4571168005&gh_src=f03985445us

Remote & International

- The Compliant & Accountable Systems Group: Postdoc position in Responsible AI. Dortmund, Germany. https://rc-trust.ai/fileadmin/trustworthy-data-science-security/groups/singh/RespAI-Postdoc.pdf

- NVIDIA: Senior AI Safety Researcher. Tel Aviv OR Yokneam, Israel. https://nvidia.wd5.myworkdayjobs.com/en-US/NVIDIAExternalCareerSite/job/Israel-Tel-Aviv/AI-Safety-Researcher_JR1997755

- Proton: Senior Marketing Manager - New Products. Barcelona, Spain OR London, UK. https://job-boards.eu.greenhouse.io/proton/jobs/4601132101

- Thomson Reuters: Director - AI Governance and Validation. Zug, Switzerland. https://careers.thomsonreuters.com/us/en/job/JREQ191345/Director-AI-Governance-and-Validation

- Privacy International: Tech Advocacy Officer. London, UK. https://privacyinternational.org/opportunities/5594/tech-advocacy-officer

- Meedan: Contract Opportunity: Lead Software Engineer. Remote. https://meedan.bamboohr.com/careers/64

- Women on Web: Arabic-speaking Help desk member. Remote. https://www.womenonweb.org/en/page/22172/arabic-speaking-help-desk

Opportunities to Get Involved

- The Critical Infrastructure Lab is calling for papers for The Politics of AI: Governance, Resistance, Alternatives. July 2025. https://www.criticalinfralab.net/preview/?id=1164

What did we miss? Please send us a reply or write to editor@exchangepoint.tech.
